Moolya Software Testing Capabilities

Exploratory Testing | Automation | Performance | Security | Accessibility | Managed services

- Someone has a business idea to solve a pain point across customers.

- That someone wants to build a product that solves the identified pain.

- That someone builds the product by hiring product and engineering teams.

- That someone wants an internal feedback system about the product.

- That someone has many questions in mind.

- That someone wants to know, “Are we ready to invest in marketing spend?”

- That someone wants to know if customers have obstacles in using the product.

- That someone wants to know if customers’ pain is really solved through the product.

- That someone hopes everyone in the company evolves based on the feedback.

- The feedback loop to evolve is called Testing.

In order to give the right feedback,

- Ask questions to understand the context

- Validate the understanding

- State the purpose

- Validate your assumptions.

- Who is that someone in your context?

- What kind of feedback are they looking for?

- What kind of feedback is relevant?

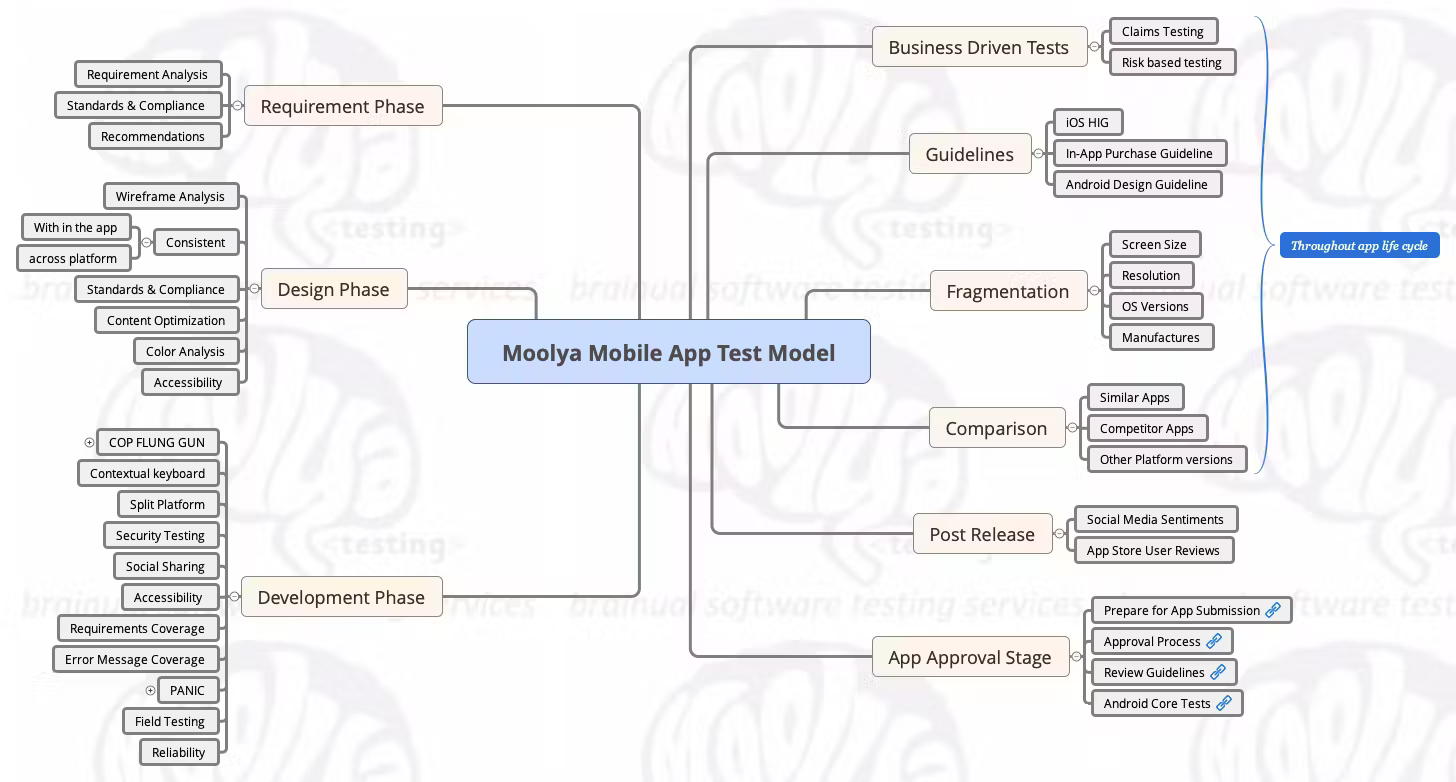

Start by building a useful mental model of the application under test, including what value it should provide and what risks could threaten that value. You can call this a strategy. Compare it with cricket: you don’t apply the same strategy in a T20 game as in a Test match, and you don’t play the same way when chasing 200 runs as when setting a target on a bowler-friendly pitch. The context determines the strategy.

Strategy is a set of guidelines that helps us solve a problem; in our case, a testing problem.

You can have a generic test strategy, but you never test a generic product, only a specific one, so the strategy gets better when it is tailored to what you are testing at the moment.

Your test strategy should give answers to

- Testing Objective

- Business / Release objective

- Source of Information

- Coverage

- Device / Browser / OS coverage

- Quality Criteria

- Feature Coverage

- Test execution approach: exploratory (ET), scripted, or automated

- Deliverables
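One way to keep any of these questions from being skipped is to record the strategy as a structured checklist and flag what is still blank. The sketch below is a hypothetical illustration, not a Moolya tool: the `TestStrategy` class and its field names are assumptions that simply mirror the list above.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class TestStrategy:
    """One field per question the strategy should answer (names are illustrative)."""
    testing_objective: Optional[str] = None
    release_objective: Optional[str] = None
    sources_of_information: Optional[str] = None
    coverage: Optional[str] = None
    device_browser_os_coverage: Optional[str] = None
    quality_criteria: Optional[str] = None
    feature_coverage: Optional[str] = None
    execution_approach: Optional[str] = None  # e.g. "exploratory", "scripted", "automated"
    deliverables: Optional[str] = None

    def unanswered(self) -> list[str]:
        """Return the questions that have no answer yet (None or blank text)."""
        return [f.name for f in fields(self)
                if not (getattr(self, f.name) or "").strip()]


if __name__ == "__main__":
    strategy = TestStrategy(
        testing_objective="Find blockers in checkout before the marketing push",
        execution_approach="exploratory",
    )
    print(strategy.unanswered())  # the seven questions still open
```

Reviewing `unanswered()` before anyone writes or runs a test is a cheap guard against the common mistake of skipping the strategy altogether.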

Test execution (which might be entirely automated, partly automated, or not automated at all) means running the tests and analyzing the results to determine whether there is a problem, how severe it might be, or otherwise answering stakeholder questions.

A common mistake is to jump straight into writing or executing tests without any strategy in place.

The visibility part

Test reporting clearly communicates the impact of each bug and how to reproduce it quickly. Your test reports should help people make the right decisions.

Test Value

Testers have three important stakeholders: the Business, the Product team (PO and BA), and Tech (CTO, VP, developers).

Our goal at Moolya is to serve all of these stakeholders by structuring our test coverage to capture value for each of them. A bug we find may not interest the tech team, but we still bring it to the product team so they can decide whether it needs to be fixed.

To do that, we need to understand their context really well, build strong relationships with them, and influence them with the right information, helping the product, and the people working on it, succeed.

To do that,

- We ask the right questions at the right time, politely and fearlessly

- We are tied to the purpose

- We do this not sporadically but in a disciplined manner

- We communicate really well

- We are focused on test coverage

- We are mission-focused

- We say “I don’t know” when we have to

- We care

- We don’t do things to please people

These principles form the foundation of the test value Moolya delivers to the stakeholders who hire us.

In some contexts we need to provide more product (PO) value, and in others more tech value.

- Product value: Feedback to BA and PO on the requirements and usefulness to the business.

- Tech value: Feedback to developers on how they can prevent these issues, plus bug reports and observations backed by deeper technical investigation rather than surface-level symptoms.

- Test value: Implementing different coverage models.

- Customer's customer value: Putting yourself in the users' shoes, finding what the users want, and articulating that feedback credibly.

Test Coverage

- “Cover” means “Enclosing a certain area”

- Software, no matter how small, is always complex to test.

- Complete testing is impossible for any software.

- We can’t cover everything.

- Testing is sampling.

- Good testing = Right sampling.

- Right or wrong is determined by the context.

- Test techniques, heuristics and oracles = Ways of sampling.

- Modelling = Representing complexity in a simpler form.

- Tests = Experiments

- Test Coverage =

- Skills leading to

- Understanding the context, leading to

- Modelling, leading to

- Techniques, leading to

- Setup, leading to

- Testing, leading to

- Observations, leading to

- Interpretation of results, leading to

- Reports, influencing people
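To make “testing is sampling” concrete, here is a minimal sketch of one well-known sampling technique, pairwise (all-pairs) combination testing, applied to device/browser/OS-style coverage. It is a greedy approximation written for illustration, not necessarily the technique Moolya uses; the `pairwise` function and the parameter values are hypothetical.

```python
from itertools import combinations, product


def pairwise(parameters: dict[str, list[str]]) -> list[dict[str, str]]:
    """Greedy all-pairs sampling over two or more parameters.

    Picks full combinations until every pair of values, for every pair of
    parameters, appears in at least one chosen combination.
    """
    names = list(parameters)

    def pairs_of(row: dict[str, str]) -> set[tuple[str, str, str, str]]:
        # All (param, value, param, value) pairs that one combination covers.
        return {(a, row[a], b, row[b]) for a, b in combinations(names, 2)}

    # Every pair that must be covered at least once.
    uncovered = {
        (a, va, b, vb)
        for a, b in combinations(names, 2)
        for va in parameters[a]
        for vb in parameters[b]
    }
    # The exhaustive test space we are sampling from.
    candidates = [dict(zip(names, values)) for values in product(*parameters.values())]

    chosen = []
    while uncovered:
        # Take the combination that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda row: len(pairs_of(row) & uncovered))
        chosen.append(best)
        uncovered -= pairs_of(best)
    return chosen


if __name__ == "__main__":
    # Hypothetical coverage dimensions for a mobile web app.
    matrix = {
        "os": ["Android", "iOS"],
        "browser": ["Chrome", "Safari", "Firefox"],
        "network": ["4G", "Wi-Fi"],
    }
    for row in pairwise(matrix):
        print(row)
```

The exhaustive matrix here has 12 combinations; the sample covers every two-way interaction with fewer rows but gives up guaranteed coverage of three-way interactions. Real matrices usually also need constraints (for example, Safari only on iOS), which this sketch does not handle. Whether that trade-off is right is, as the list says, decided by the context.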

Learn about the product, read user reviews


- Learn about the customer

- Target audience

- Business model

- Competitors and other products of the customer

Create a knowledge base covering the product, the domain, and the questions asked to the tech/product team:

- Domain

- Technology

- Questions

Define what to test, how to test, test documentation, and test reporting


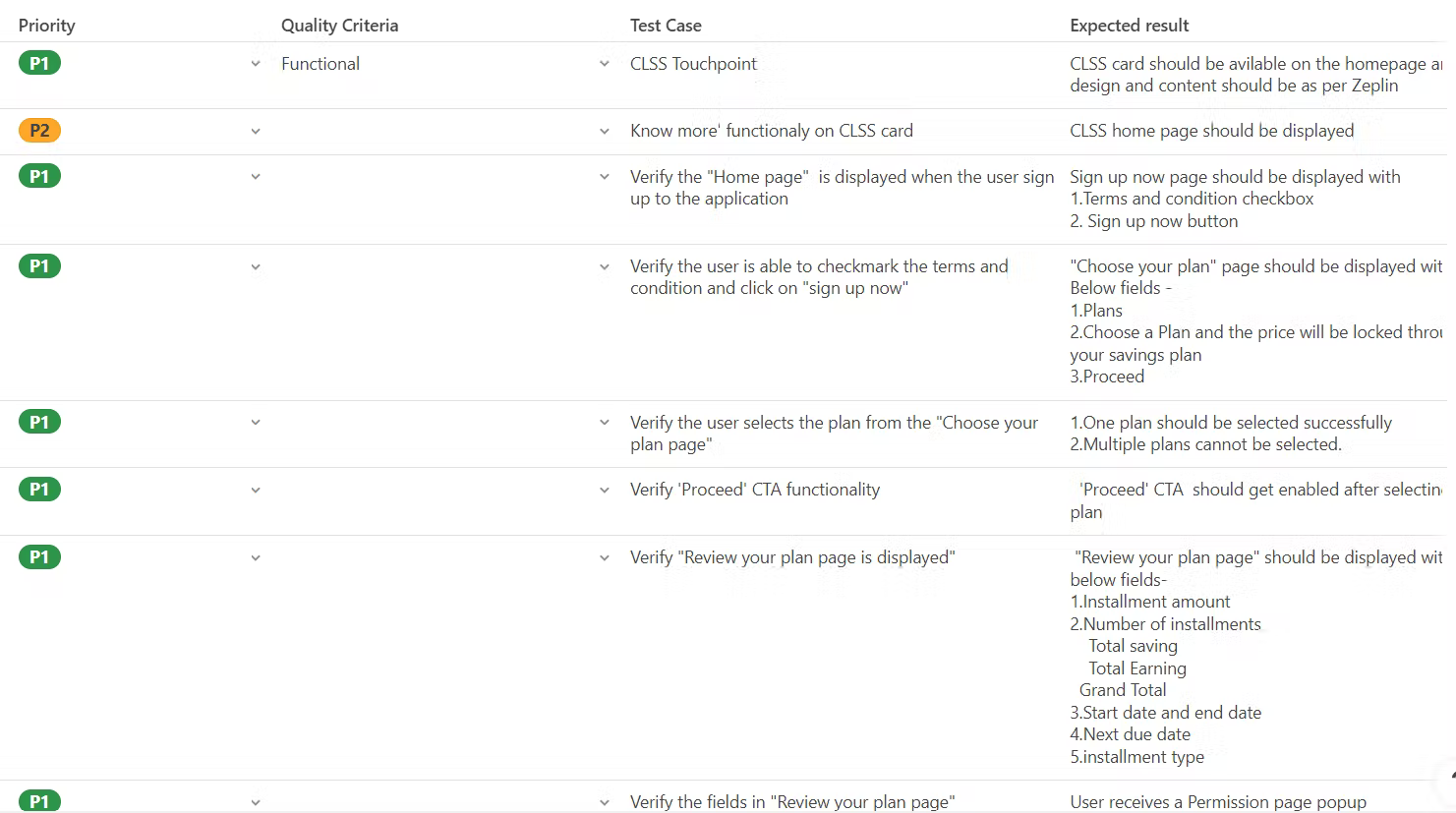

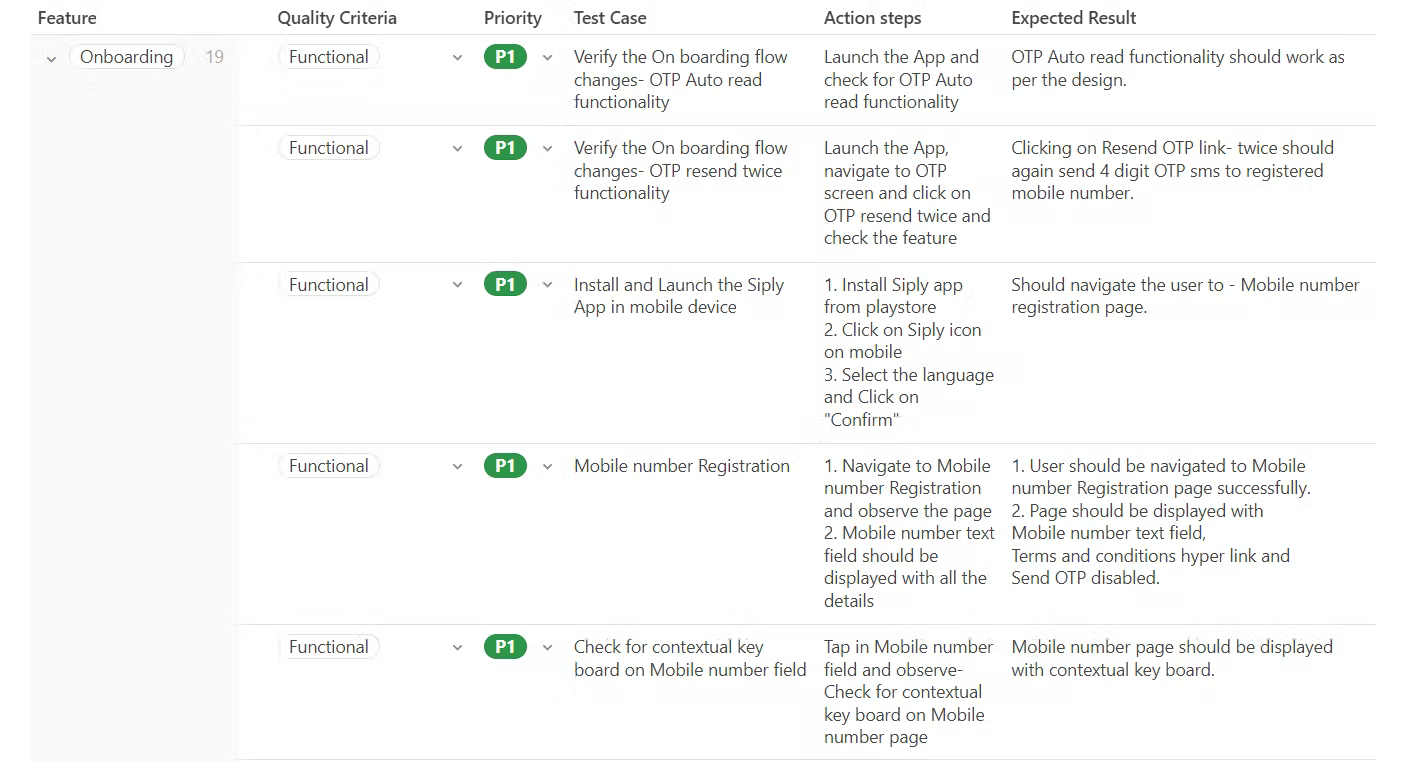

● What to Test

○ Know the Mission

○ Model the System

○ Identify Platform/device

○ Identify Test Data

○ Identify Risks

○ Who will Test?

● How to Test

○ Identify Features/Users involved

○ Test Planning

○ Effort and Time Evaluation

○ Setup Test Environment

● Test Documentation

○ Test Artifacts

○ Reviews & Inspections

○ Test Closure Reports

○ Weekly/Daily Status Reports

- Heuristics and Oracles

- Test Techniques

Test execution (which might be entirely automated, partly automated, or not automated at all) means running the tests and analyzing the results to determine whether there is a problem, how severe it might be, or otherwise answering stakeholder questions.

A common mistake is to jump straight into writing or executing tests without any strategy in place.

● Verify Testability

● Prioritize the features

● Test Aggressively

● Record test observation

● Report Bugs

● Follow Bug Life Cycle

● Regression Testing
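The states and transitions of a bug life cycle vary by team and by bug tracker. As an assumption, the sketch below models one common variant (new → open → fixed → retest → closed, with reopen and reject paths) as a small state machine that refuses illegal moves; the state names and the `move` helper are illustrative, not taken from any specific tool.

```python
from enum import Enum


class BugState(Enum):
    NEW = "new"
    OPEN = "open"
    FIXED = "fixed"
    RETEST = "retest"
    REOPENED = "reopened"
    CLOSED = "closed"
    REJECTED = "rejected"


# Allowed transitions; any move not listed here is refused.
TRANSITIONS = {
    BugState.NEW: {BugState.OPEN, BugState.REJECTED},
    BugState.OPEN: {BugState.FIXED, BugState.REJECTED},
    BugState.FIXED: {BugState.RETEST},
    BugState.RETEST: {BugState.CLOSED, BugState.REOPENED},
    BugState.REOPENED: {BugState.FIXED},
    BugState.CLOSED: set(),
    BugState.REJECTED: set(),
}


def move(current: BugState, target: BugState) -> BugState:
    """Return the new state, or raise ValueError if the transition is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move bug from {current.value} to {target.value}")
    return target


if __name__ == "__main__":
    state = BugState.NEW
    for step in (BugState.OPEN, BugState.FIXED, BugState.RETEST, BugState.CLOSED):
        state = move(state, step)
        print(state.value)
```

Forcing every fix through `RETEST` mirrors the idea that a bug is closed only after a tester has verified it, which is also where regression testing starts.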

Test reporting clearly communicates the impact of each bug and how to reproduce it quickly. Your test reports should help people make the right decisions.

● Test Execution Dashboard

- Feature Coverage

- Device Coverage

- Test Ideas/Charters

- Bugs, Recommendations

- Defined KRA & KPI for Testing Team

- Escalation Matrix

- Monthly Internal Review Meeting

- Quarterly Review Meeting with stakeholders
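The feature and device coverage figures on a dashboard can be derived directly from execution records. The sketch below assumes a simple, hypothetical `TestResult` record with `feature`, `device`, and `status` fields; in practice the data would come from whatever test management tool the project uses.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TestResult:
    feature: str
    device: str
    status: str  # assumed values: "pass", "fail", "blocked", "not_run"


def dashboard_summary(results: list[TestResult], features: list[str], devices: list[str]) -> dict:
    """Coverage = share of planned features/devices exercised by at least one executed test."""
    executed = [r for r in results if r.status != "not_run"]
    features_hit = {r.feature for r in executed} & set(features)
    devices_hit = {r.device for r in executed} & set(devices)
    return {
        "feature_coverage_pct": round(100 * len(features_hit) / len(features), 1),
        "device_coverage_pct": round(100 * len(devices_hit) / len(devices), 1),
        "status_counts": dict(Counter(r.status for r in results)),
        "untested_features": sorted(set(features) - features_hit),
    }
```

Listing `untested_features` next to the percentage keeps the number honest: stakeholders see what is not yet covered, not just a ratio.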

Requirement gathering and analysis

○ Understanding the environment, platform, and dependencies, if any

○ Feasibility analysis

- Test case analysis for automation

- Analyzing and defining the test cases for automation (validating automatable vs. non-automatable tests)

- Categorizing test cases based on priority/severity
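The categorization step above can be sketched as a simple sort and split. This is an illustration under stated assumptions: the `TestCase` record, the P1 to P3 priority scale, and the critical/major/minor severity scale are hypothetical, and a real project would use its own scales and feasibility notes.

```python
from dataclasses import dataclass

# Assumed ranking scales; a lower number means "automate sooner".
PRIORITY_RANK = {"P1": 0, "P2": 1, "P3": 2}
SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2}


@dataclass
class TestCase:
    case_id: str
    priority: str      # "P1" .. "P3"
    severity: str      # "critical", "major" or "minor"
    automatable: bool  # outcome of the automatable / non-automatable review


def automation_backlog(cases: list[TestCase]) -> tuple[list[TestCase], list[TestCase]]:
    """Return (automation backlog ordered by priority then severity, manual-only cases)."""
    backlog = sorted(
        (c for c in cases if c.automatable),
        key=lambda c: (PRIORITY_RANK[c.priority], SEVERITY_RANK[c.severity]),
    )
    manual = [c for c in cases if not c.automatable]
    return backlog, manual
```

Keeping the non-automatable cases in their own list, rather than dropping them, makes it explicit which checks will stay with exploratory or scripted manual testing.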

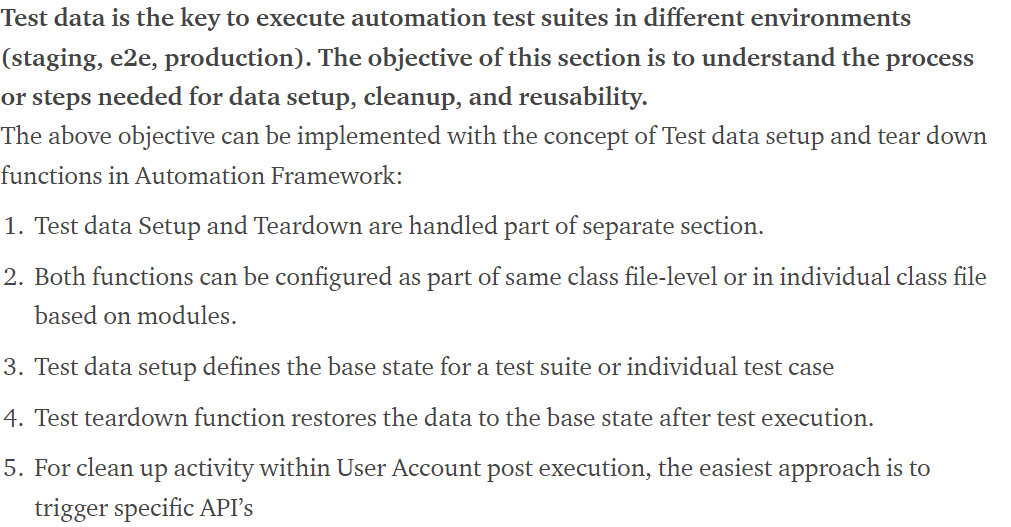

- Understanding of test data

- Infrastructure readiness

- Ensuring a dedicated test environment is available for authoring test scripts and executing tests

- Test execution environment setup readiness

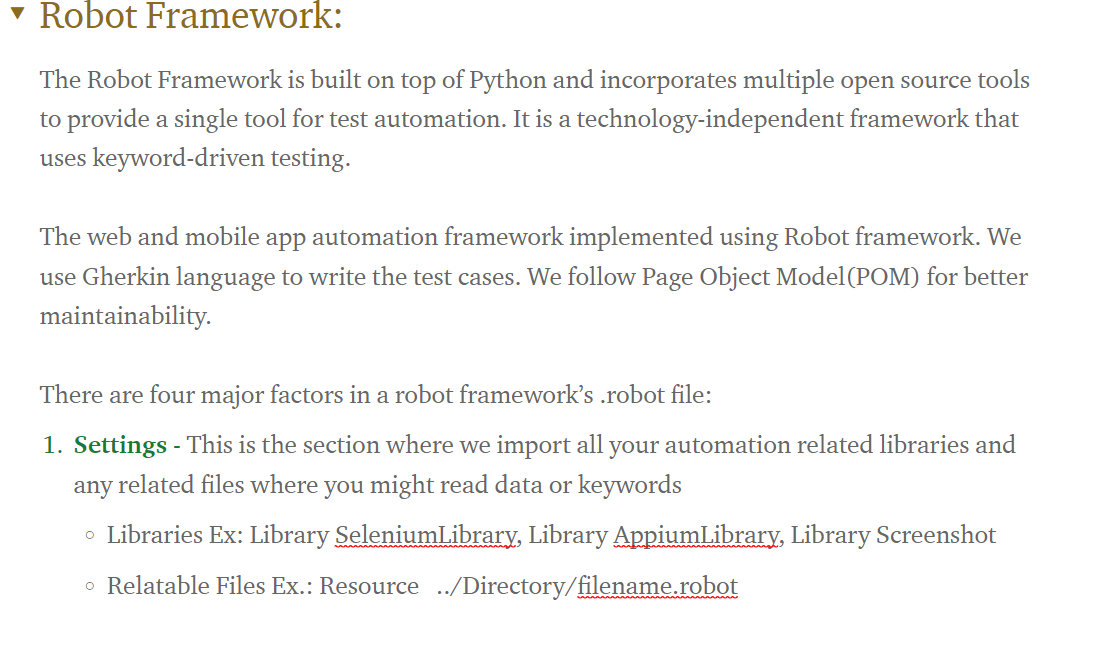

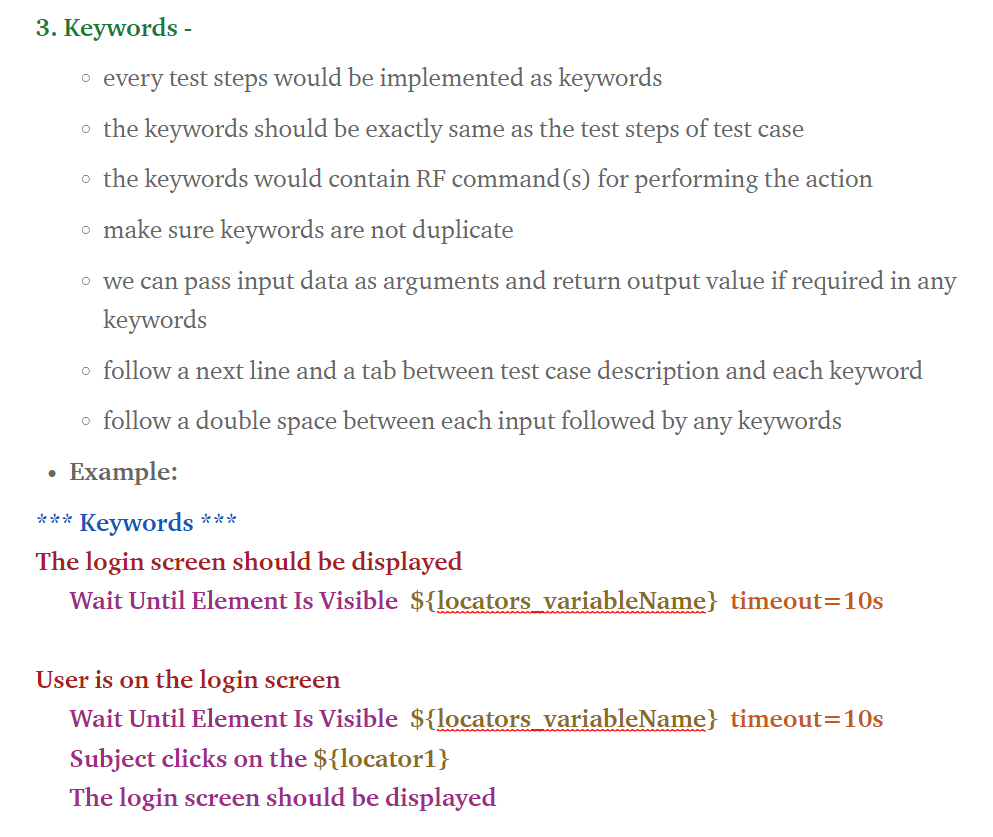

- Creating or customizing the test automation framework based on the application structure, business model, or any specific requirements (e.g., a BDD approach)

- Defining processes for branching strategy, code review, issue logging, and retrospectives

- Defining deliverables and success criteria to achieve ROI in automation from an early stage

Robust code structure, high reliability

● All generic controls are available and reusable for web and mobile platforms

● Centralized page object access – Low maintenance

● Cross browser, platform support

● Extensibility – the framework can be extended to support multiple platforms

● Data management from external files (Excel and INI file support)

● Test report dashboard – detailed suite-wise and module-wise reports for better analysis

● Easily integrates with Jenkins pipeline process
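The framework itself is not shown in this document. As a hedged illustration of two of the listed ideas, centralized locators (so a UI change is fixed in one place) and test data kept in an external INI file, here is a minimal sketch using only Python's standard `configparser`. The page names, selectors, and data sections are hypothetical, and Excel support would need a third-party spreadsheet library that is not shown.

```python
import configparser

# Centralized locator registry: one place to update when the UI changes.
# Page names and selectors are hypothetical.
LOCATORS = {
    "login": {
        "username": "#username",
        "password": "#password",
        "submit": "button[type=submit]",
    },
}


def locator(page: str, element: str) -> str:
    """Look up a selector; a KeyError points straight at the missing entry."""
    return LOCATORS[page][element]


# Stand-in for an external .ini file; each section is one data-driven test case.
TEST_DATA_INI = """
[valid_user]
username = demo_user
password = s3cret
expected = dashboard

[locked_user]
username = locked_user
password = s3cret
expected = account_locked
"""


def load_test_data(text: str) -> list[dict[str, str]]:
    """Read each INI section as one test case, keeping the section name as its id."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return [{"case": name, **parser[name]} for name in parser.sections()]


if __name__ == "__main__":
    for case in load_test_data(TEST_DATA_INI):
        print(case["case"], locator("login", "username"), case["expected"])
```

With a real file, `parser.read("login_data.ini")` (hypothetical path) would replace `read_string`; test scripts then iterate over the returned cases instead of hard-coding data.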

- Better testcase and test data management

- Structured and defined approach of accessing test objects, test data

- Consistency, reusability and easy maintenance

- Standard approach of writing the test cases across the project

- The initial learning curve for the framework is steep; prior training helps address it

● Depending on the requirements and the complexity of the application, we need 2-4 weeks to set up or customize the automation framework. This initial investment pays off significantly over the long-term automation and maintenance cycle.

● During project initiation, we set the strategy and the right expectations, and determine the number of automation engineers the project needs.

1.1 Purpose

The purpose of this document is to provide a technical proposal with an estimate for the vulnerability assessment and penetration testing of web applications deployed by Moolya.

1.2 Scope

The scope of this document is limited to the technical proposal for the following cybersecurity work.

Penetration Testing (Grey Box)

The scope of work includes grey box penetration testing of an isolated instance of the specified application or service. Fixed vulnerabilities will be verified if the fix is made available within the warranty period, which lasts one month after the final report is released. Effort and cost estimates for grey box penetration testing are provided.

1.3 Abbreviations & Definitions

1. HTTP: Hypertext Transfer Protocol

2. API: Application Programming Interface

1.4 References

1.5 Overview

This proposal covers different test approaches required for satisfying the entire assessment scope. These approaches are:

1. Security Architecture Study

2. Classify Security Testing

3. Threat modelling

4. Test Planning

5. Test execution

6. Report Generation

7. Retest for the fixed bugs

2.1 Security architecture study

To make an application hack-proof, security requirements need to be addressed at the very first stage of software design, and the architecture must be designed to prevent external attacks. In this phase, the application will be analyzed for possible threats at the architecture level. The security goals and security compliance objectives will be studied, along with understanding and analyzing the requirements of the application under test. Some of the security design checkpoints are listed below:

1. Economy of mechanism

2. Fail-safe defaults

3. Complete mediation

4. Open Design

5. Separation of privilege

6. Least privilege

7. Least common mechanism

8. Psychological Acceptability
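Three of these principles, fail-safe defaults, least privilege, and complete mediation, translate directly into code that reviewers and testers can check. The sketch below is a hypothetical role-based check, not taken from any specific application; the role names, permission strings, and helper functions are assumptions.

```python
# Hypothetical role -> permission table; each role gets only what it needs (least privilege).
PERMISSIONS = {
    "viewer": {"report:read"},
    "editor": {"report:read", "report:write"},
    "admin": {"report:read", "report:write", "user:manage"},
}


def is_allowed(role: str, action: str) -> bool:
    """Fail-safe default: unknown roles and unlisted actions are denied."""
    return action in PERMISSIONS.get(role, set())


def handle(role: str, action: str) -> str:
    # Complete mediation: every request is checked; no earlier decision is reused.
    if not is_allowed(role, action):
        return "403 Forbidden"
    return "200 OK"
```

A tester can probe these principles directly: try a role the system does not know, an action no role was granted, and a lower role attempting a higher role's action. Each should be refused.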

2.2 Classify security testing

All system setup information used to develop the system or software, such as network, operating system, technology, and hardware, will be collected, studied, and analyzed.

2.3 Threat Modelling

After collecting, studying and analyzing the data based on the above steps, a threat profile will be created.

2.4 Test planning

Based on the identified threats, vulnerabilities and security risks, a test plan will be created to address all those issues.

2.5 Test case execution

The vulnerability assessment and penetration testing is performed based on the plan and the gathered information.

2.6 Reports

A detailed report of the results will be prepared with proper mitigation plans. There will be two types of report:

1. Technical Report: Lists all identified vulnerabilities with a description of how to fix each one, so that developers can fix the issues using the report.

2. Management Report: It contains bug summary with graphical representations.

2.7 Re-testing

Once the reported bugs are fixed by the developers, retesting verifies whether those bugs still exist, and the status is updated in the reports.

3.1 Project Specification

| # | Item | Description |
|---|---|---|
| 1 | URL | _______________ |
| 2 | Tech Stack | Java, ReactJS, JavaScript, Erlang, MySQL |

4.1 Effort Web application

| # | Item | Effort (Man Hours) |
|---|---|---|
| 1 | Information Gathering | |
| 1.1 | Determine highly problematic areas of the application | |
| 1.2 | Construct business logic and data flow | |
| 1.2.1 | Integrity of the workflow | |
| 1.2.2 | Users can’t bypass or skip steps | |
| 1.2.3 | Users can’t perform privileged activities without authorization | |
| 2 | Checking for Login page | |
| 2.1 | Session management | |
| 2.2 | Brute forcing | |
| 2.3 | Privilege escalation | |
| 2.4 | Password complexity | |
| 3 | Perform automated and manual crawling | |
| 4 | Conduct tests and discover vulnerabilities | |
| 4.1 | Test for business logic | |
| 4.2 | Check for version-specific attacks | |
| 4.3 | Form validation Testing | |
| 4.4 | Security Vulnerability Testing | |
| 4.5 | HTTP Method(s) Testing | |
| 4.6 | User Session Testing | |
| 4.7 | Directory Browsing | |
| 4.8 | Authentication Testing | |
| 4.9 | Configuration Management Testing | |
| 4.10 | Data Validation Testing (All Injections) | |
| 4.11 | Client-side testing | |
| 5 | Backend Services Testing | |
| 5.1 | Broken Object Level Authorization | |
| 5.2 | Broken User Authentication | |
| 5.3 | Excessive Data Exposure | |
| 5.4 | Broken Function Level Authorization | |
| 5.5 | Injection | |
| 6 | Report | |
| | Total (Hours) | |
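Item 5.1, Broken Object Level Authorization, is worth illustrating because functional testing easily misses it: the API checks that a user is logged in but not that the requested record belongs to that user. The sketch below is a self-contained stand-in, not a real test harness; the `Invoice` record, the in-memory store, and the `get_invoice` and `bola_probe` helpers are hypothetical. A real grey box test would send the same requests over HTTP using two different user sessions.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    id: int
    owner: str
    amount: int


# In-memory stand-in for the application's data store (hypothetical records).
INVOICES = {1: Invoice(1, "alice", 120), 2: Invoice(2, "bob", 75)}


def get_invoice(current_user: str, invoice_id: int):
    """Return (status, body). The object-level check: the caller must own the record."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None:
        return 404, None
    if invoice.owner != current_user:
        return 403, None  # without this check, alice could read bob's invoice by changing the id
    return 200, invoice


def bola_probe(user: str, foreign_ids: list[int]) -> list[int]:
    """Tester's view: request objects owned by someone else and flag any that succeed."""
    return [i for i in foreign_ids if get_invoice(user, i)[0] == 200]
```

An empty list from `bola_probe` means no foreign object was exposed; any id it returns is a finding for the technical report, along with the request that reproduces it.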

4.2 Effort Mobile application

| # | Item | Effort (Man Hours) |
|---|---|---|
| 1 | Information Gathering | |
| 1.1 | Determine highly problematic areas of the application | |
| 1.2 | Construct business logic and data flow | |
| 1.2.1 | Integrity of the workflow | |
| 1.2.2 | Users can’t bypass or skip steps | |
| 2 | Assessment/Analysis | |
| 2.1 | Local File Analysis | |
| 2.2 | Static Analysis | |
| 2.3 | Dynamic Analysis | |
| 3 | Inter-Process Communication | |
| 3.1 | Endpoint Analysis | |
| 3.2 | Content Providers, Intents | |
| 3.3 | Broadcast Receivers | |
| 3.4 | Activities | |
| 3.5 | Services | |
| 4 | Exploitation | |
| 4.1 | Test for business logic | |
| 4.2 | Check for version-specific attacks | |
| 4.3 | Security Vulnerability Testing | |
| 4.4 | User Session Testing | |
| 4.5 | Authentication Testing | |
| 4.6 | Configuration Management Testing | |
| 5 | Backend Services Testing | |
| 5.1 | Broken Object Level Authorization | |
| 5.2 | Broken User Authentication | |
| 5.3 | Excessive Data Exposure | |
| 5.4 | Broken Function Level Authorization | |
| 5.5 | Injection | |
| 6 | Report | |
| | Total (Hours) | |

4.3 Effort Server

| # | Item | Effort (Man Hours) |
|---|---|---|
| 1 | Information Gathering | |
| 2 | Vulnerability Scanning | |
| 3 | Exploitation | |
| 4 | Gaining access & privilege evaluation | |
| 5 | Manual pen-testing | |
| 6 | Automated tool based pen-testing | |
| 7 | Privilege Escalations | |
| 8 | Post exploitation reconnaissance | |
| 9 | Pivoting threats | |
| 10 | Compromise Remote users | |
| 11 | Auditing | |
| 12 | Report | |
| | Total (Hours) | |

It involves:

- a. Understanding the metrics

- b. Identifying the right tool for measurement

- c. Outlining test profiles and designing the tests

- d. Analyzing test results

- e. Providing recommendations for improvements

- f. When using a monitoring tool:

- i. Scheduling tests

- ii. Understanding test agents

- iii. Monitoring setup

- iv. Test run verification

- g. Reporting
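For the analysis step (d), a run's raw response times are usually condensed into a few figures such as mean and high percentiles. The sketch below uses the nearest-rank percentile definition, which is one of several; load-testing tools may compute percentiles slightly differently. The `meets_target` rule and its p95 limit are hypothetical stand-ins for whatever metrics were agreed in step (a).

```python
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms: list[float]) -> dict:
    """Condense one test run's response times into commonly reported figures."""
    return {
        "count": len(latencies_ms),
        "mean": round(statistics.mean(latencies_ms), 1),
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
        "max": max(latencies_ms),
    }


def meets_target(summary: dict, p95_limit_ms: float) -> bool:
    """Hypothetical pass/fail rule: the run passes if p95 latency is within the limit."""
    return summary["p95"] <= p95_limit_ms
```

Reporting p95 and p99 alongside the mean matters because a healthy average can hide a slow tail that a noticeable share of users actually experiences.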

Accessibility - Compliance Levels

| Level A | Level AA | Level AAA |
|---|---|---|
| 20 Checks | 14 Checks | |
| Minimum | General Standard | Stringent |
| Not very convenient for people with disabilities | Balance between development effort and ease of use for people with disabilities | People with disabilities can navigate easily |

- Finding a balance of using components during the development cycle

- Accessibility enabling with inbuilt components of Mobile apps (Android & iOS)

- Understanding accessibility over time and review design components to make the app accessibility friendly
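As one concrete example of a Level AA check, WCAG 2.x success criterion 1.4.3 asks for a contrast ratio of at least 4.5:1 for normal-size text. The sketch below implements the WCAG relative-luminance and contrast-ratio formulas for hex colours; the helper names are hypothetical, and it covers only this one criterion, not a full audit.

```python
def _channel(c8: int) -> float:
    """Linearize one 8-bit sRGB channel, per the WCAG 2.x relative-luminance definition."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def luminance(hex_color: str) -> float:
    """Relative luminance of a colour given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05); ranges from 1:1 up to 21:1."""
    lighter, darker = sorted((luminance(fg), luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg: str, bg: str) -> bool:
    # Success criterion 1.4.3 (Level AA): at least 4.5:1 for normal-size text.
    return contrast_ratio(fg, bg) >= 4.5
```

For example, grey `#767676` text on white just clears 4.5:1, while the slightly lighter `#777777` falls just short. That is the kind of near-miss a design review catches more cheaply than a post-release fix.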

Sample Projects Data > Case studies and documents

Customer Onboarding Workflow

Product Updates At Moolya

At Bugasura, we look at user feedback and reviews to provide pure value and serve our users the best we can.

We found out that our users were having trouble with Jira sync. And last week's sprint was all about it.

We’ve got this Big Big Jira Update for you!

Bugasura and Jira are now in complete sync

- Mapping Fields: Map both the system and custom fields between Bugasura and Jira.

- Auto push: Sync all issues in one click

- Pull feature: Changes made to issue details inside Jira will also be reflected in Bugasura.

- Auto-sync: Issue details, comments, and attachments are all synced automatically when an already synced issue is changed.

A HOME TO THE CREATIVE TESTER

Promoting Passion for Testing.

At Moolya, you don’t ever ask for permission to do something good. We are building a culture where testers support each other to grow while having fun mastering their craft.

We promote original, lateral thinking and inspire our people to be leaders in the testing space, not just leads by designation. This brews innovation in our labs, where our testers come together to build a suite of utilities that gives shape to their craft and performs tasks that guarantee value in the projects they contribute to.

Case Studies

With NFTs buzzing around the markets like busy honey bees, Lysto offered Moolya a great opportunity to work on KGFVerse, India's first-ever metaverse project, conceived and launched by Hombale Films Productions as a promotional event for the release of the film K.G.F Chapter 2 in association with BookMyShow.

Each team and every business we work with comes with its own challenges and its own story. One such story, still in the making as we post this, is our journey with Collibra. Collibra is a data intelligence and data governance company based in New York, USA. They aim to “make data meaningful” and simplify complex data management.

LIFE AT MOOLYA

A culture built on empowering people.

At Moolya, teams are encouraged to make their own calls and deliver autonomously. We concentrate on providing people with the tools they need to deliver and then let them take it from there. Moolya is a place where you can look forward to being free and being responsible for your actions.

Our Talk Series

Continuously trusted by forward-thinking teams around the world

Mootalk with Guardianlink ft. Jump.Trade


With the IPL scene heating up, Cricket NFTs are dropping like sixes and fours. But do you know what NFTs are? Catch Mootalks as we discuss the basics of NFTs and why we should all be part of this dazzling future.

Mootalk with Guardianlink ft. Jump.Trade

The second episode of Mootalks discusses how essential it is to build a sense of community among NFT enthusiasts, and what you can do after acquiring a digital asset, in an enthralling conversation between Abilash and Neha from Jump.Trade.